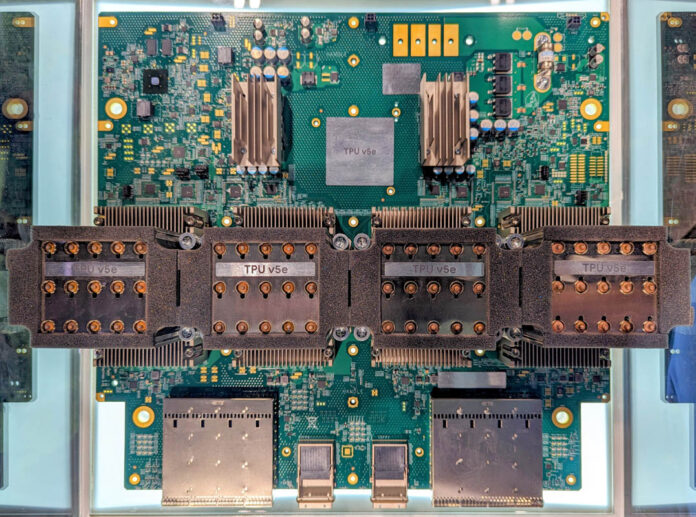

Artificial intelligence (AI) is evolving at a breathtaking pace, and the hardware that powers it has to keep stride. Google’s TPU v5e, the cost-efficient member of its fifth-generation Tensor Processing Unit family, is designed for scalable, efficient training and inference in machine learning (ML) and large-scale AI applications. Let’s dive into what the TPU v5e offers and why it matters for developers, researchers, and businesses.

What Is a TPU?

Before we delve into the specifics of the TPU v5e, let’s recap what TPUs are all about. A Tensor Processing Unit (TPU) is a custom application-specific integrated circuit (ASIC) designed by Google to accelerate the training and inference of ML models. Where CPUs and GPUs are general-purpose processors, TPUs are built around dedicated matrix-multiply units, optimized for the high-volume matrix multiplications and tensor operations that power neural networks. They’re used in tasks ranging from image recognition to natural language processing and large language models (LLMs) such as BERT and PaLM.
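To make the matrix-multiplication point concrete, here is a minimal pure-Python sketch (not TPU code) showing that a dense neural-network layer reduces to a matrix product, and how its operation count grows with layer size. The names `matmul` and `dense_layer_flops` are illustrative, and counting each multiply-add as 2 FLOPs is an assumed convention, the one commonly used when quoting accelerator throughput.

```python
def matmul(a, b):
    """Naive matrix product of two lists of lists.

    Returns (result, macs), where macs is the number of
    multiply-add operations performed.
    """
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    out = [[0.0] * n for _ in range(m)]
    macs = 0
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply-add
                macs += 1
            out[i][j] = acc
    return out, macs


def dense_layer_flops(batch, d_in, d_out):
    """FLOPs for one dense layer (activations @ weights).

    Assumption: one multiply-add counts as 2 floating-point operations.
    """
    return 2 * batch * d_in * d_out


# A batch of 2 inputs with 2 features, passed through a 2x2 weight matrix.
activations = [[1.0, 2.0], [3.0, 4.0]]
weights = [[5.0, 6.0], [7.0, 8.0]]
result, macs = matmul(activations, weights)
```

Here `matmul` performs 8 multiply-adds, which `dense_layer_flops(2, 2, 2)` counts as 16 FLOPs. An accelerator runs these same multiply-adds in parallel on dedicated matrix hardware rather than in nested loops.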

The TPU v5e: What’s New?

The TPU v5e introduces several advancements that make it stand out in the world of AI hardware:

- Strong Per-Chip Performance: Each TPU v5e chip delivers up to 197 teraflops (TFLOPS) of peak bf16 compute, or up to 394 TOPS at int8, enabling rapid training and inference for complex ML models.

- High-Bandwidth Memory: Each chip carries 16 GB of high-bandwidth memory (HBM), which keeps model weights and activations close to the compute units and reduces memory bottlenecks on data-intensive tasks.

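- Scalability at Its Core: Up to 256 chips can be connected in a single pod, so peak compute and memory grow roughly linearly with chip count, reaching about 50 petaflops of peak bf16 compute and 4 TB of HBM per pod for training massive AI models or running large-scale ML workloads.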

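- Cost and Energy Efficiency: The TPU v5e is tuned for performance per dollar and per watt rather than maximum per-chip speed. Google’s launch announcement cited up to 2x the training performance per dollar and up to 2.5x the inference performance per dollar of TPU v4, making it a cost-effective solution for businesses.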
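The per-chip figures above can be turned into back-of-the-envelope capacity estimates. The sketch below is plain arithmetic on the numbers quoted in this article (197 TFLOPS, 16 GB, 256 chips); the function names are illustrative, and it assumes 1 GB = 10^9 bytes, peak (not sustained) throughput, and that only model weights occupy memory. Real workloads also need room for activations, optimizer state, or a KV cache, and rarely reach peak throughput.

```python
import math

# Figures quoted in this article for a single TPU v5e chip and pod.
PEAK_BF16_TFLOPS_PER_CHIP = 197
HBM_GB_PER_CHIP = 16
MAX_CHIPS_PER_POD = 256


def pod_totals(chips=MAX_CHIPS_PER_POD):
    """Aggregate peak compute (PFLOPS) and HBM (GB) for a slice of `chips` chips."""
    if not 1 <= chips <= MAX_CHIPS_PER_POD:
        raise ValueError(f"chips must be between 1 and {MAX_CHIPS_PER_POD}")
    return {
        "peak_pflops": chips * PEAK_BF16_TFLOPS_PER_CHIP / 1000,
        "hbm_gb": chips * HBM_GB_PER_CHIP,
    }


def min_chips_for_weights(num_params, bytes_per_param=2):
    """Smallest chip count whose combined HBM holds the weights alone.

    Assumptions: bf16 weights (2 bytes each) by default, 1 GB = 10**9 bytes,
    and no memory reserved for activations or optimizer state.
    """
    weight_bytes = num_params * bytes_per_param
    hbm_bytes_per_chip = HBM_GB_PER_CHIP * 10**9
    return math.ceil(weight_bytes / hbm_bytes_per_chip)


full_pod = pod_totals()
chips_7b = min_chips_for_weights(7_000_000_000)
chips_70b = min_chips_for_weights(70_000_000_000)
```

Under these assumptions a full pod totals 50.432 PFLOPS of peak bf16 compute and 4,096 GB of HBM. The bf16 weights of a 7-billion-parameter model (14 GB) fit on one chip, while a 70-billion-parameter model (140 GB) needs at least 9 chips before any working memory is counted.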

Integration with Google Cloud

The TPU v5e isn’t just powerful; it’s also available on demand through Google Cloud. This integration enables developers to:

- Use TPUs with popular frameworks like TensorFlow, PyTorch, and JAX.

- Deploy large-scale ML workloads on managed services like Vertex AI and Google Kubernetes Engine (GKE).

- Scale from a single chip up to a full 256-chip pod as their computing needs grow.

This level of accessibility is a boon for startups, researchers, and enterprises alike: renting TPU capacity by the hour avoids the upfront cost of buying and operating accelerator hardware.

Who Benefits from the TPU v5e?

The TPU v5e is a versatile tool with applications across industries:

- AI Researchers: Accelerate groundbreaking research in deep learning, reinforcement learning, and generative AI.

- Enterprises: Optimize business processes with AI-powered insights, from demand forecasting to customer sentiment analysis.

- Developers: Build and deploy cutting-edge AI solutions faster, thanks to the TPU v5e’s support for TensorFlow, PyTorch, and JAX.

Google’s Vision: Beyond TPU v5e

Google’s advancements in AI hardware don’t stop with the TPU v5e. In May 2024 the company announced Trillium, its sixth-generation TPU, claiming a 4.7x increase in peak compute per chip over the TPU v5e and more than 67% better energy efficiency. Positioned as an alternative to Nvidia’s dominant GPUs, Trillium sets the stage for the next generation of AI applications.

Why It Matters

The TPU v5e represents a significant step forward in AI hardware, offering:

- Faster time-to-results for AI and ML projects.

- Cost savings through energy-efficient operation.

- Scalability to handle today’s increasingly complex AI models.

For businesses, developers, and researchers, the TPU v5e isn’t just a tool—it’s a gateway to the future of AI.

Final Thoughts

As the demand for AI continues to grow, so does the need for hardware that can keep up. Google’s TPU v5e delivers the performance, scalability, and efficiency required to power the AI revolution. Whether you’re a researcher training the next breakthrough model or a developer deploying innovative AI applications, the TPU v5e is built to meet your needs. And with Google’s ongoing commitment to innovation, the future of AI hardware has never looked brighter.